Adding Credibility: Creating Custom Content in R - openxlsx

This article is the second in a multi part series on creating custom content using R. You can find the first article, on ggplot2, here. Full code will be made available on Github.

Charts, as explored in our first Adding Credibility article, can make data accessible to and interpretable by a large population. A significant portion of this population may want to dive deeper, but do not have the technical expertise to analyze data in R. Fortunately, the R ecosystem has some great tooling to make data available in .xlsx and .csv format.

This article assumes a basic understanding of R and the Tidyverse. For more information on R and RStudio, Matt Dancho’s Business Science University provides phenomenal resources. In addition, my colleague and mentor Jonathan Regenstein has written extensively on financial analysis in R.

Today, we are going to focus on the openxlsx package. This package allows us to make data available to more users, particularly those with backgrounds in finance, accounting, and other Excel-heavy fields. Openxlsx can help us to display dataframes in clearly formatted and labeled spreadsheets. Our example will utilize data from two different sources that I use in a forthcoming article on housing supply. We will spend some more time with fredr and introduce Business and Industry data from the Census Bureau. As always, the code is available on my Github in the custom-content repository.

Building on the first article in the Adding Credibility series, we want to include data from the Federal Reserve in our dataset for export. In particular we want to look at monthly single-family housing starts. For clarity we filter to all observations after January 1, 2000.

Our units are expressed in thousands so we use a mutate to true up our data. While this step may be unnecessary for graphing due to label conventions, we want users of our data to be working with accurate information. Additionally, the value name native to FRED tables is not descriptive. We will rename our value field below.

housing_starts <- fredr_series_observations(series_id = "HOUST1F") %>%

select(date,value) %>%

filter(date >= start_period) %>%

mutate(value = value * 1000) %>%

rename("sfr_starts" = value)This is a great start, but we must be cognizant of our end users’ needs. Many will want macroeconomic context. Let’s pull a standard metric for the cost of capital, the Federal Funds Effective Rate, to help our readers out.

This is a pretty easy data pull, and fortunately for us is updated with the same frequency as our Housing Starts data. This facilitates a simple left_join to create our master dataset. Since our date column has consistent naming we do not need to specify anything for the left_join by argument.

macro_master <- housing_starts %>%

left_join(fed_funds)The next data field we need in our analysis is more complex. We want to analyze the Average Length of Time from Start to Completion of New Privately Owned Residential Buildings dataset from the Business and Industry section of the Census Bureau’s site. The data itself is easy to work with, but it is both stored as an Excel workbook and is only measured on a monthly basis. Here we will leverage the tidyverse’s readr package.

For better or worse, the Census report is a little clunky upon export. We will need to perform a few manipulations once we read in the data. This guarantees that we have a dataframe that we can both provide to readers and work with programmatically. This sort of work takes trial and error and may require adjustments before you repurpose for your own data analysis.

To instantiate our dataframe we need to load the readxl package. This lets us load the data from Excel into our R environment. The code below allows us to read the .xls to a temporary destination that we then load into R.

url <- "https://www.census.gov/construction/nrc/xls/avg_starttocomp_cust.xls"

destfile <- "avg_starttocomp_cust.xls"

curl::curl_download(url,destfile)

construction_timing <- read_excel(destfile,skip = 7) %>%

clean_names() %>%

select(1:2) %>%

slice(-c(1:4)) %>%

slice(1:51) %>%

mutate(x1 = as.numeric(x1)) %>%

rename("date" = x1,

"months_to_complete" = total1) %>%

mutate(date = mdy(str_glue("01-01-{date}")))This data goes as far as 2021. The Census Bureau is expected to release 2022 data in March 2023. The aforementioned article will be updated accordingly.

How do we handle annual data on a monthly dataframe? When we left_join on our macro_master dataframe we are left with a ton of NAs. The tidyverse has planned for this exact contingency. We can use the fill function to fill values down our dataframe.

macro_master_w_reno <- macro_master %>%

left_join(construction_timing) %>%

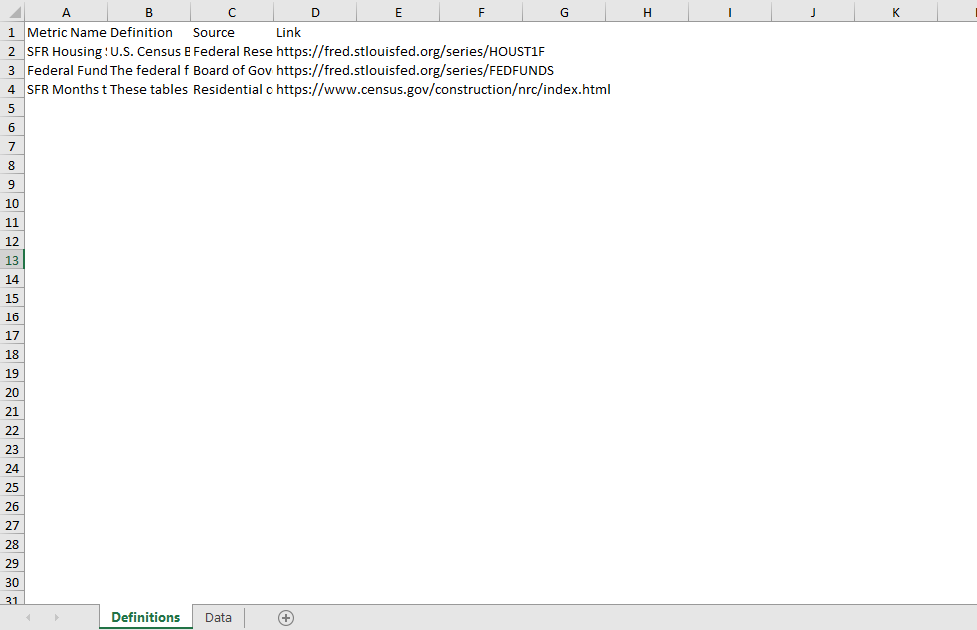

fill(months_to_complete)Now we have a dataframe with information that would be useful to any analyst of the housing market. Openxlsx will allow us to create a nicely formatted workbook to attach to our article. I like to include two tabs in any workbook with data for external use, a data tab and a definitions tab. To accomplish this we need to do two things; we need to give our macro_master_w_reno dataframe intuitive display names and we need to create a definitions dataframe.

macro_for_export <- macro_master_w_reno %>%

rename("Date" = date,

"SFR Housing Starts" = sfr_starts,

"Federal funds Rate" = fed_funds_rate,

"SFR Months to Complete Construction" = months_to_complete)Our definitions dataframe will contain both technical definitions and sourcing notes. This allows users to validate our work. Additionally, we want to give credit to the hard workers at the Census Bureau and Federal Reserve. Without their diligent research none of this would be possible. The code block to create our definitions is quite lengthy but can be accessed on my Github.

Openxlsx has a variety of functions that you can apply to the basic unit, a workbook object. I generally just name my workbook wb, but if you are creating multiple workbooks in a file you would want to be more specific in your naming conventions. We already know that our workbook will have two worksheets, named Definitions and Data. We have chosen to show gridlines on our Data tab but not on our Definitions tab. We can write the data from our existing dataframes and it will come through without formatting.

wb <- createWorkbook()

addWorksheet(wb,

sheetName = "Definitions",

gridLines = F)

addWorksheet(wb,

sheetName = "Data",

gridLines = T)

writeData(wb,

sheet = 1,

macro_definitions)

writeData(wb,

sheet = 2,

macro_for_export)This is sufficient for end-users if you are crunched for time. However, we are focused on adding credibility to our data analysis. Consistency is key to credibility and we want our formatting to mesh with other data assets such as GGPlots. The openxlsx package makes adding styles easy. Our color scheme and number formatting will be consistent with the work we did in the first article of this series. Below is an example of creating and adding our header_style to the Definitions tab.

header_style <- createStyle(textDecoration = "Bold",

fgFill = "#9BC2E6",

border = "bottom",

borderStyle = openxlsx_getOp("borderStyle","double"))

addStyle(wb,

sheet = 1,

style = header_style,

rows = 1,

cols = 1:4)If you take a look at the data attached to this article, the definitions are quite verbose. To fit everything on one page we must adjust our column widths. Openxlsx provides for this through the setColWidths function.

setColWidths(wb,

1,

cols = 1,

widths = 21)Finally, we have created and applied all the necessary formatting. The last step is to save our workbook. Please be sure to specify your desired file format (.xlsx)in the title. Our next article will focus on GTs, a package to create simple tables for display.

saveWorkbook(wb,"SFR Macro Data.xlsx",overwrite = T)You may download our final workbook here.

Alec A. Nathan is the Director of Research and Data Analytics at Sylvan Road Capital. A graduate of the University of Georgia, Alec specializes in real estate investment and data science. Please direct any inquiries to anathan@sylvanroad.com.